In APAC, AI adoption is accelerating from the ground up, driven by connected consumers and tech-savvy youth. Supported by strong investment, CEO-led strategies, and rapid digital growth, the region is becoming the world’s most dynamic AI proving ground.

For cybersecurity leaders, accelerating AI adoption does more than boost productivity: it also transforms how cyber threats are created and automated.

Kaspersky experts outline how AI development is expected to reshape the cybersecurity landscape in 2026. Large language models (LLMs) are influencing defensive capabilities while simultaneously expanding opportunities for threat actors.

1. Deepfakes are becoming mainstream, and awareness will continue to grow. Companies are increasingly addressing synthetic-content risks and training employees not to fall victim to deepfake-based fraud. As the volume and formats of deepfakes expand, awareness is rising among both organisations and consumers. Deepfakes are now a consistent part of the security agenda, requiring systematic training and internal policies.

2. Deepfake quality will improve, driven by better audio, while the barrier to entry keeps falling. Deepfakes already have high visual quality, and audio realism is the next frontier. At the same time, simpler tools now let non-experts create mid-quality deepfakes in just a few clicks. As a result, average quality continues to rise, creation becomes accessible to a broader audience, and cybercriminals will inevitably keep exploiting these capabilities.

3. Efforts to develop a reliable system for labelling AI-generated content will continue. There are still no unified criteria for reliably identifying synthetic content. For this reason, new technical and regulatory initiatives aimed at addressing the problem are likely to emerge.

4. Real-time (online) deepfakes will continue to evolve but remain tools for advanced users. Real-time face and voice swapping is improving but still requires an advanced setup. Mass adoption is unlikely, yet targeted risks will rise as growing realism and virtual-camera manipulation make such attacks more convincing.

5. Open-weight models will approach the capabilities of top closed models in many cybersecurity-related tasks, creating more opportunities for misuse. Closed models provide stronger safeguards, while open-weight models are quickly catching up without comparable restrictions. This narrows the gap between the two categories, and both can be used for malicious purposes.

6. The line between legitimate and fraudulent AI-generated content will become increasingly blurred. AI can now generate professional-looking scam content, while brands are normalising synthetic visuals. As a result, distinguishing real from fake will become even more challenging, both for users and for automated detection systems.

7. AI will become a cross-chain tool in cyberattacks, used across most stages of the kill chain. Threat actors are already using LLMs to write code, build infrastructure, and automate operations. As capabilities advance, AI will support more attack stages, from preparation and communication to vulnerability probing and deployment. Attackers will also conceal traces of AI use, complicating analysis.

8. AI will become a more common tool in security analysis and will change how SOC teams work. Agent-based systems will automate scanning, vulnerability detection, and context gathering, reducing manual work. Specialists will shift from searching for data to making decisions, while security tools move toward prompt-driven, natural-language interfaces.

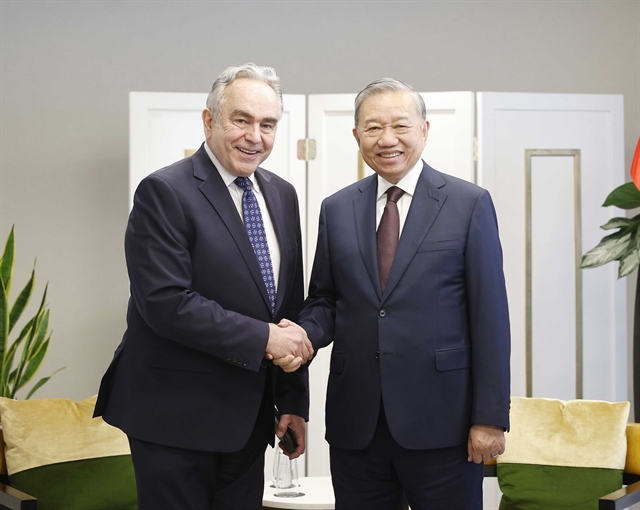

“Asia Pacific is setting the global pace for AI adoption, with consumers and enterprises advancing faster than any other region. This momentum is creating tremendous opportunity but also redefining how cyber threats emerge and scale. As businesses navigate this shift, they can rely on Kaspersky’s more than 20 years of expertise to strengthen their defences,” says Adrian Hia, Managing Director for Asia Pacific at Kaspersky.

To help APAC organisations protect their AI-driven transformation, Kaspersky offers the following recommendations:

• Keep software updated to reduce the risk of known vulnerabilities being exploited.

• Limit public exposure of remote desktop services and enforce strong authentication.

• Use advanced security solutions, such as Kaspersky Next, to detect and respond to complex threats.

• Apply up-to-date Threat Intelligence to monitor attacker tactics and techniques.

• Maintain isolated, regularly tested data backups.